Details

It is recommended to purchase the UGV Rover PT PI5 ROS2 Kit if you want to use a Raspberry Pi as the host controller, or the UGV Rover PT Jetson Orin ROS2 Kit if you want to use an NVIDIA Jetson Orin Nano as the host controller.

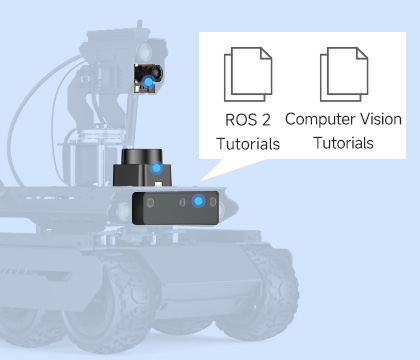

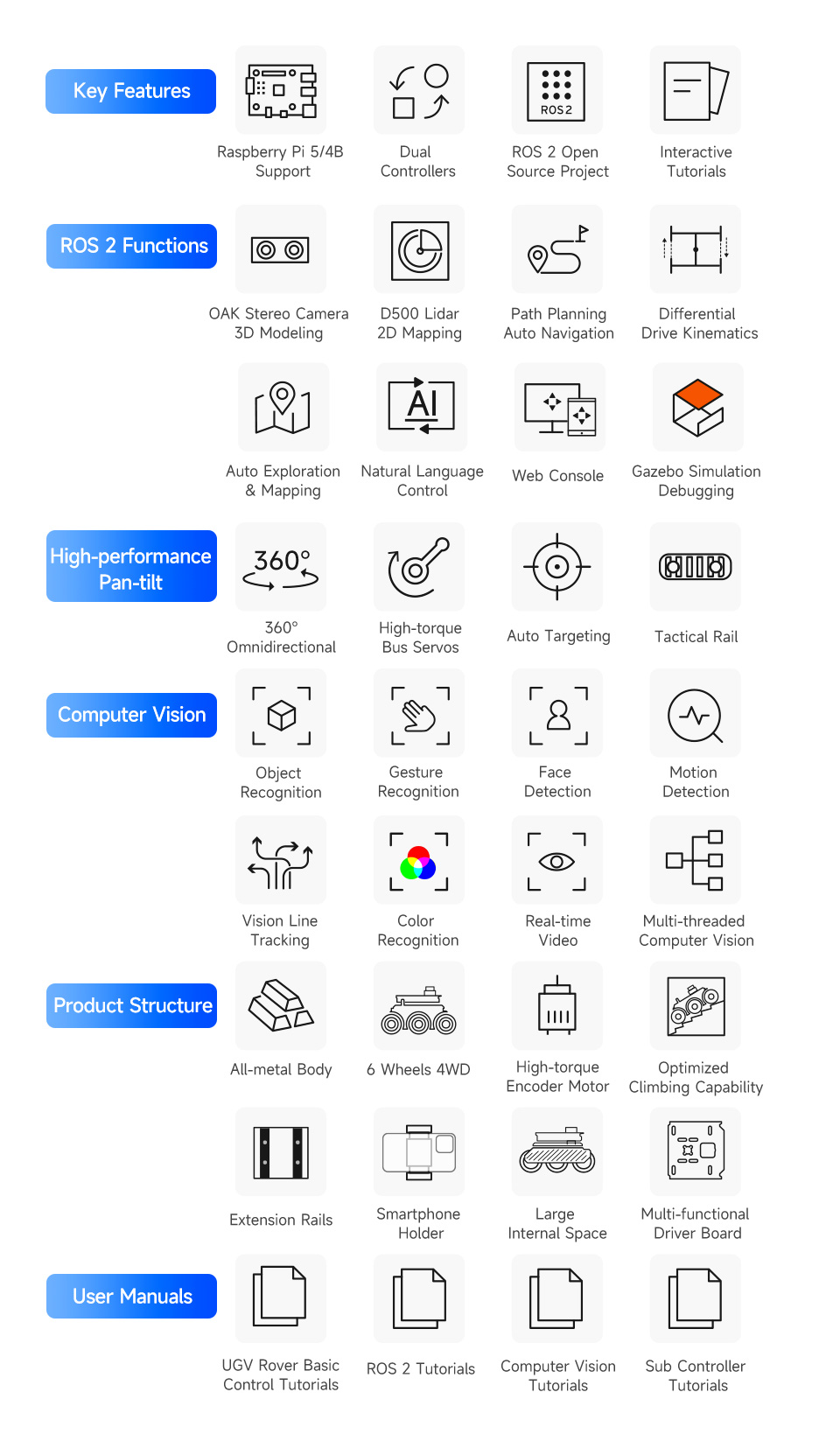

Both kits offer excellent reliability and are equipped with a high-torque 2-axis Pan-Tilt, D500 Lidar, and OAK-D Lite depth camera. We provide comprehensive basic functionalities and ROS2 tutorials to help beginners quickly learn and apply robotics knowledge. The robot kits offer superior expandability, allowing users to deploy various applications.

The product model name includes: chassis type, Pan-Tilt option, host controller model, and function type.

| Model Name | description | Corresponding Module | |

|---|---|---|---|

| Chassis type | UGV Rover | 6 Wheels 4WD chassis |  |

| UGV Beast | Independent suspension off-road tracked chassis |  |

|

| Pan-Tilt option | PT | with 2-axis Pan-Tilt |  |

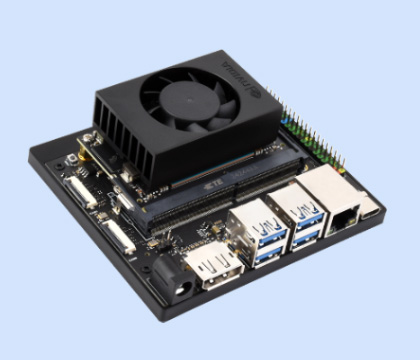

| Host controller | Jetson Orin | Using Jetson Orin Nano as host controller. Note: the kits with "Acce" at the end of the model name do not include the host controller, and require users to prepare their own Jetson Orin board. |  |

| PI 5 | Using Raspberry Pi 5 as host controller. Note: the kits with "Acce" at the end of the model name do not include the host controller, and require users to prepare their own Raspberry Pi 5 and its heatsink. |  |

|

| PI 4B | Using Raspberry Pi 4B as host controller. Note: the kits with "Acce" at the end of the model name do not include the host controller, and require users to prepare their own Raspberry Pi 4B and its heatsink. |  |

|

| Acce | The kits with "Acce" at the end of the model name do not include the host controller, you can choose the Acce Version if you've got a host board. |  |

|

| Function type | AI Kit | Basic AI kit with a 5MP ultra-wide-angle camera, comes with computer vision functionality and tutorials. |  |

| ROS2 Kit | On the basis of the AI Kit, equipped with a D500 360° Lidar and OAK-D Lite depth camera, comes with the complete ROS2 functionality and tutorials. |  |

|
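The naming scheme above can be read mechanically. The following is a minimal sketch, not an official tool: `KitModel` and `parse_model_name` are hypothetical names, and the host tokens are assumed to be spelled as in the model names used on this page (`PI5`, `PI4B`, `Jetson Orin`).

```python
from dataclasses import dataclass

# Tokens taken from the naming table; spelling follows the model names
# used on this page (assumption: "PI5"/"PI4B" without a space).
CHASSIS = ("UGV Rover", "UGV Beast")
HOSTS = ("Jetson Orin", "PI5", "PI4B")
FUNCTIONS = ("ROS2 Kit", "AI Kit")


@dataclass
class KitModel:
    chassis: str
    pan_tilt: bool
    host: str
    function: str
    host_included: bool


def parse_model_name(name: str) -> KitModel:
    """Split a kit name into chassis, Pan-Tilt, host, and function fields."""
    rest = " ".join(name.split())  # normalise whitespace

    # A trailing "Acce" means the host controller is not included.
    host_included = True
    if rest.endswith(" Acce"):
        host_included = False
        rest = rest[: -len(" Acce")]

    chassis = next((c for c in CHASSIS if rest.startswith(c + " ")), None)
    if chassis is None:
        raise ValueError(f"unknown chassis type in {name!r}")
    rest = rest[len(chassis):].strip()

    function = next((f for f in FUNCTIONS if rest.endswith(f)), None)
    if function is None:
        raise ValueError(f"unknown function type in {name!r}")
    rest = rest[: -len(function)].strip()

    # Optional "PT" token marks the 2-axis Pan-Tilt version.
    pan_tilt = False
    if rest == "PT" or rest.startswith("PT "):
        pan_tilt = True
        rest = rest[2:].strip()

    if rest not in HOSTS:
        raise ValueError(f"unknown host controller in {name!r}")
    return KitModel(chassis, pan_tilt, rest, function, host_included)


if __name__ == "__main__":
    print(parse_model_name("UGV Rover PT PI5 ROS2 Kit Acce"))
```

Parsing from both ends (chassis at the front, function type and `Acce` at the back) leaves only the optional `PT` token and the host controller in the middle, which keeps the logic short.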

The UGV Rover ROS2 Kit is an AI robot designed for exploration and creation with excellent expansion potential, based on ROS 2 and equipped with Lidar and depth camera, seamlessly connecting your imagination with reality. Suitable for tech enthusiasts, makers, or beginners in programming, it is your ideal choice for exploring the world of intelligent technology.

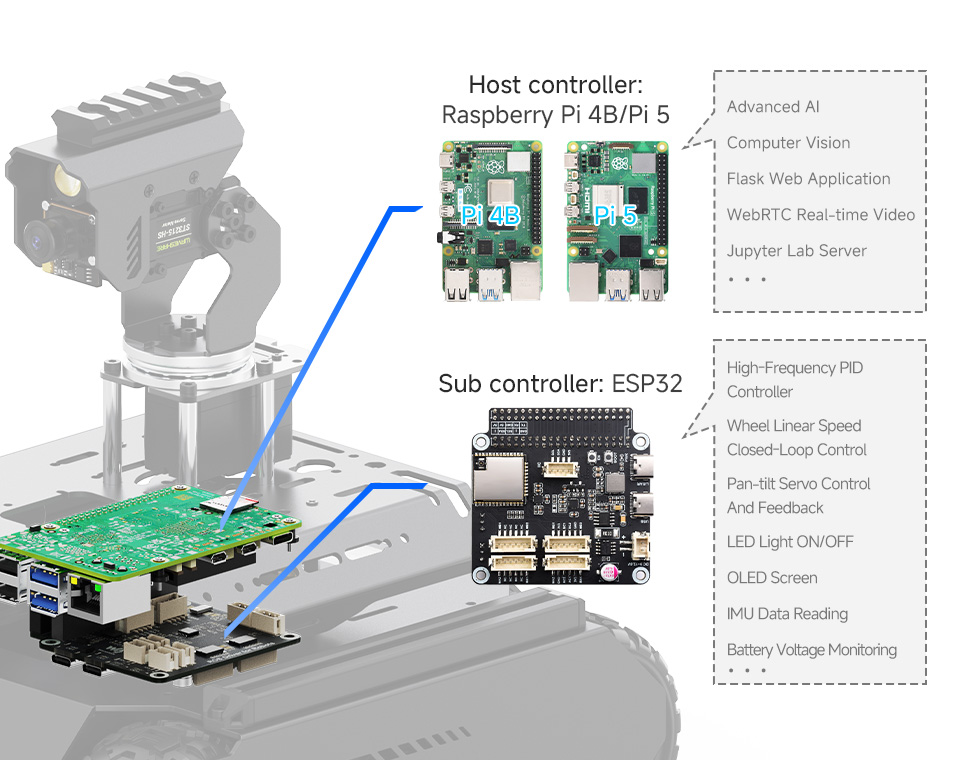

Equipped with a high-performance Raspberry Pi computer, the kit can handle complex strategies and functions. Its dual-controller design combines the high-level AI functions of the host controller with the high-frequency basic operations of the sub controller, keeping every operation accurate and smooth.

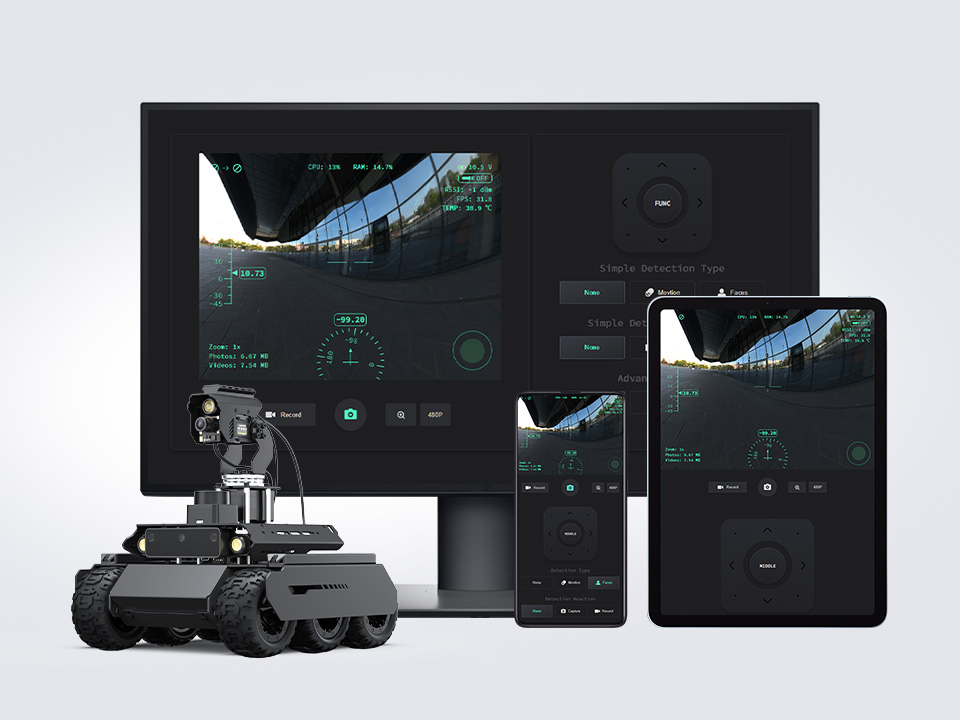

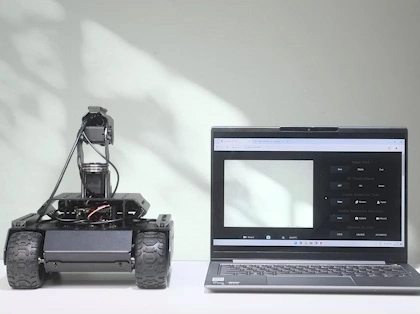

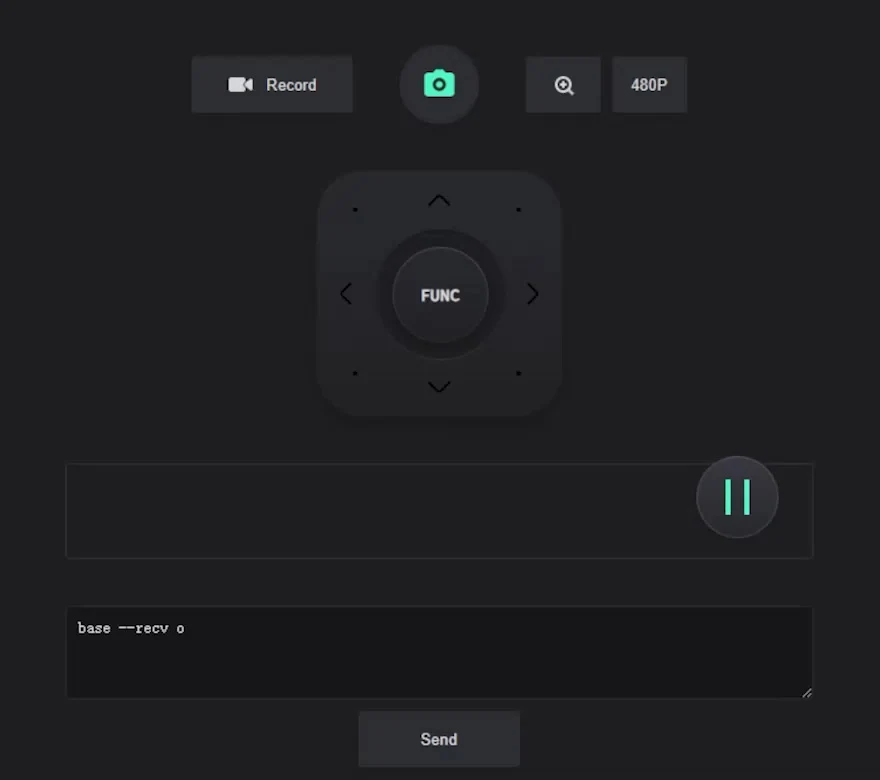

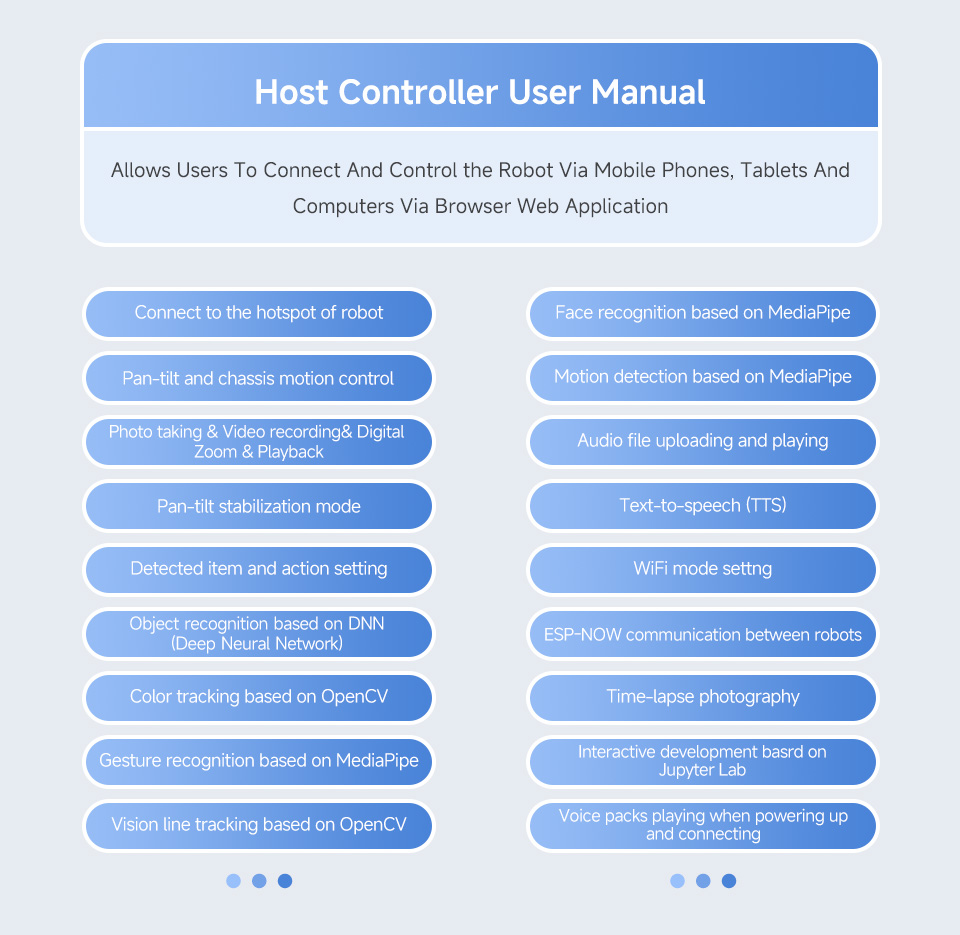

The robot is easy to control remotely through the UGV Rover Web Application: there is no software to download, just open your browser and start. You can use the basic ROS 2 functions of the robot without installing a virtual machine on the PC. With high-frame-rate real-time video transmission and multiple AI computer vision functions, the UGV Rover is an ideal platform to realize your ideas and creativity!

Optional Pan-Tilt Module (PT Version only) for better expansion potential. Options for host controller (Raspberry Pi 4B / Raspberry Pi 5), or you can choose the Acce Version if you've got a Pi. All kits are equipped with a camera, mounting accessories, TF card, cooling fan, etc.

| Model | UGV Rover PT PI4B/PI5 ROS2 Kit | UGV Rover PT PI4B/PI5 ROS2 Kit Acce | UGV Rover PI4B/PI5 ROS2 Kit | UGV Rover PI4B/PI5 ROS2 Kit Acce |

|---|---|---|---|---|

|

|

|

|

|

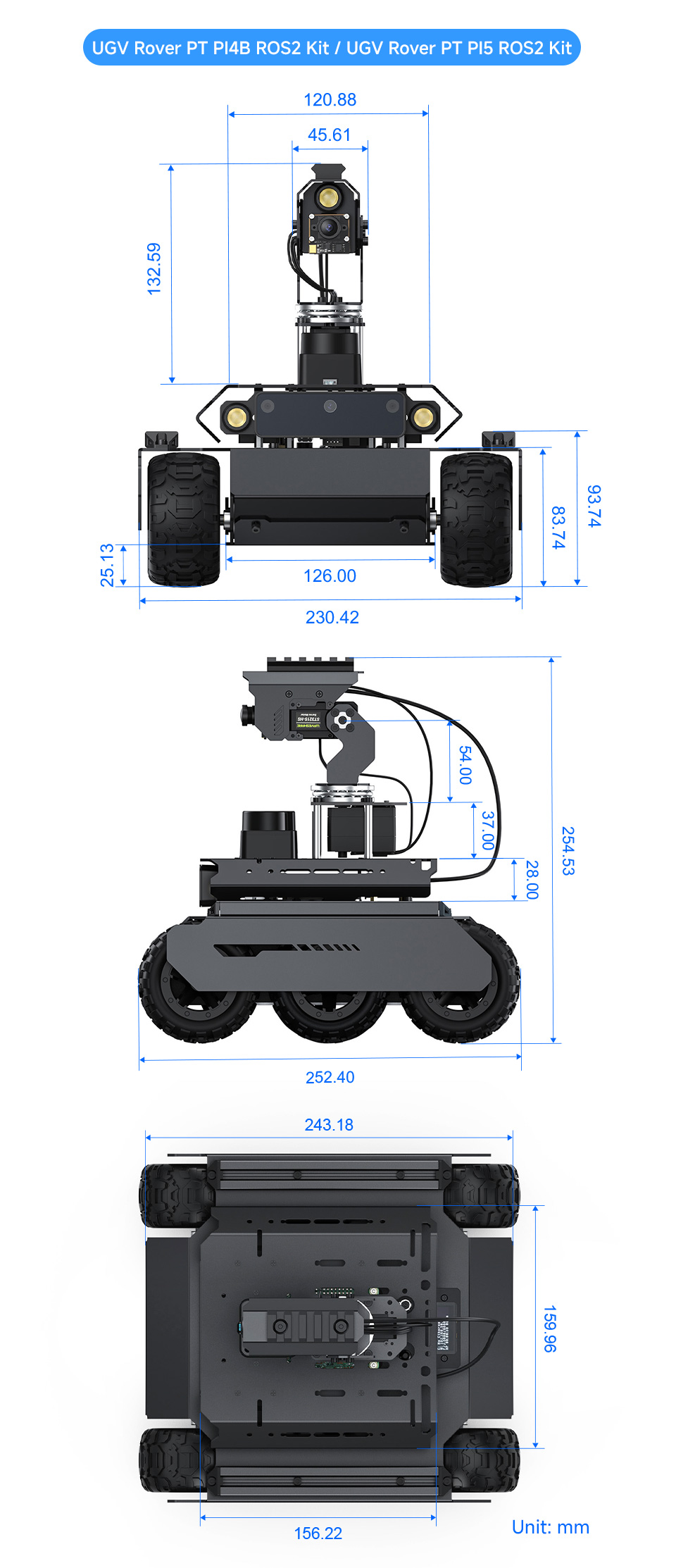

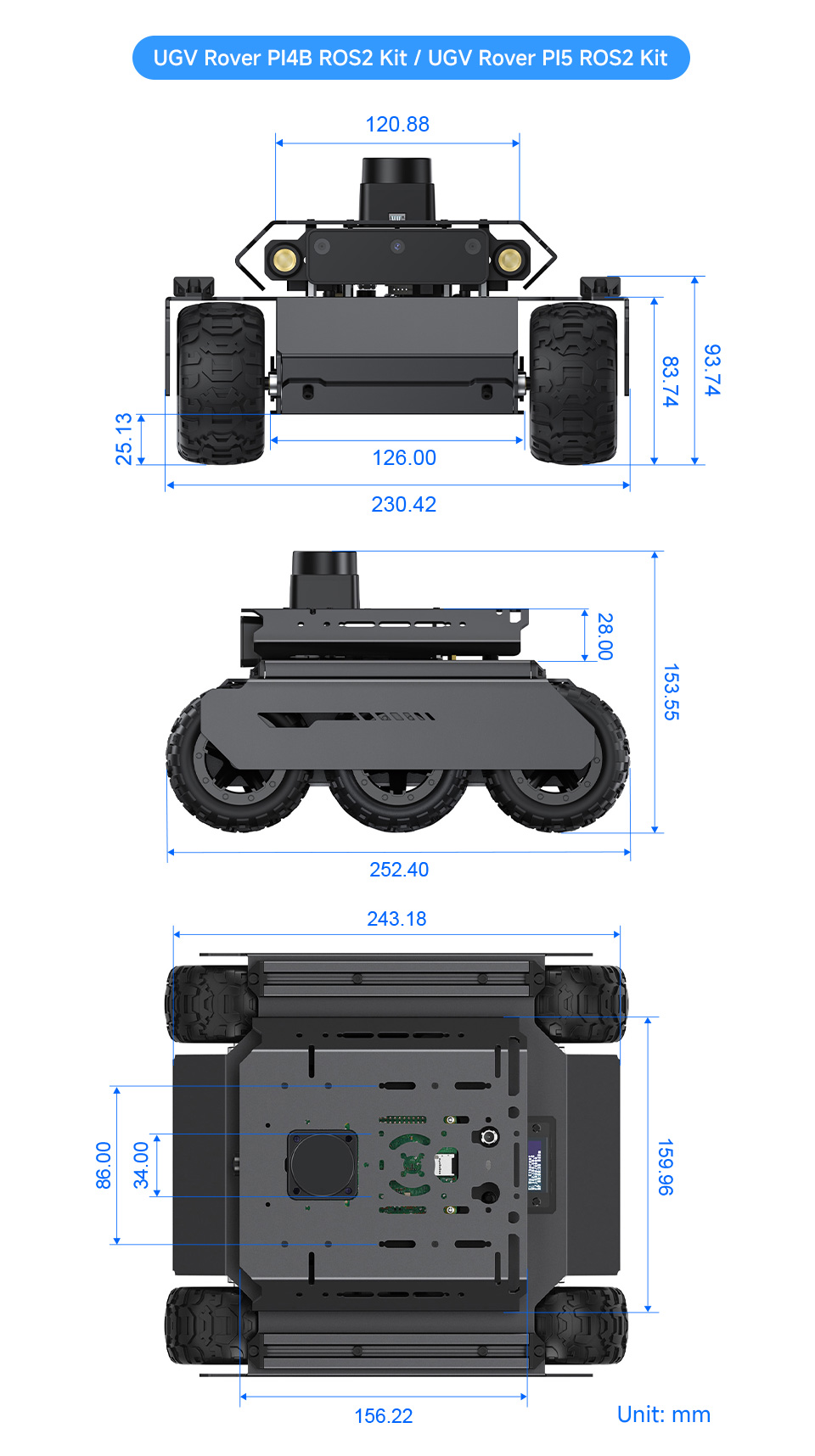

| Dimension | 230.42×252.40×254.53mm | 230.42×252.40×153.55mm | ||

| Weight | 2251±5g | 1935±5g | ||

| Chassis height | 25mm | |||

| Pan-Tilt DOF | 2 | - | ||

| Pan-Tilt SERVO TORQUE | 30KG.CM | - | ||

| Pan-Tilt SERVO | ST3215 Servo | - | ||

| Host controller | RPi 4B 4GB / RPi 5 4GB | NOT included | RPi 4B 4GB / RPi 5 4GB | NOT included |

| Host System support | Debian Bookwrom | |||

| ROS2 Version | ROS2-HUMBLE-LTS | |||

| Camera FOV | 160° | |||

| Power supply | 3S UPS Module | |||

| Battery support | 18650 lithium battery x 3 (NOT included) | |||

| Demo control methods | Web application / Jupyter Lab interactive programming | |||

| Default Max speed | 1.3m/s | |||

| Number of Driving wheels | 4 | |||

| Number of wheels | 6 | |||

| Tire diameter | 80mm | |||

| Tire width | 42.5mm | |||

| Minimum turning radius | 0M (In-situ rotation) | |||

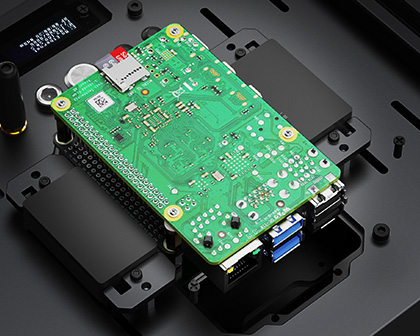

Supports Raspberry Pi 5 / Raspberry Pi 4B, with powerful computing performance to handle more complex tasks, offering more possibilities

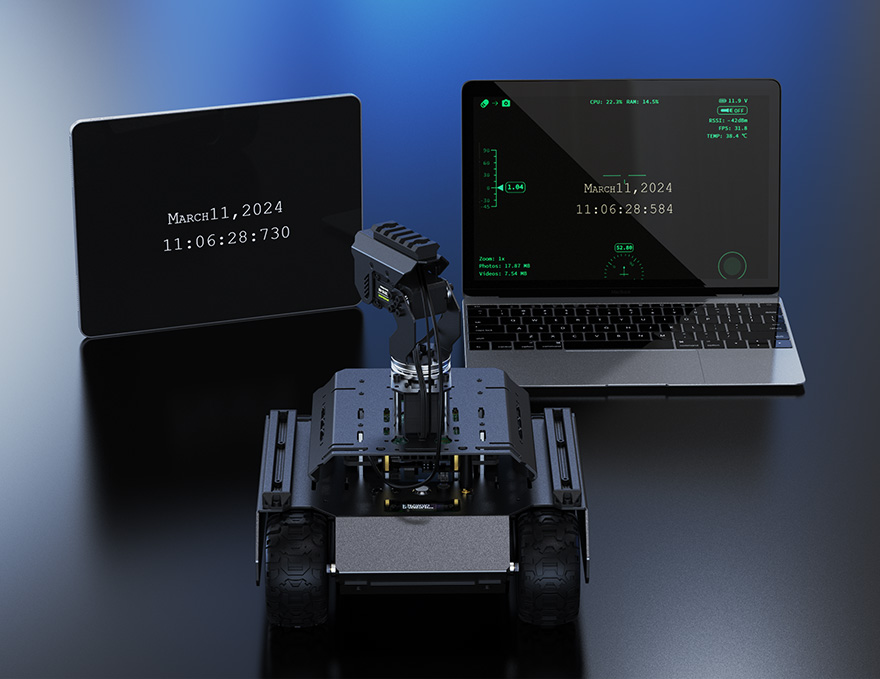

Connecting with Raspberry Pi 4B

Connecting with Raspberry Pi 5

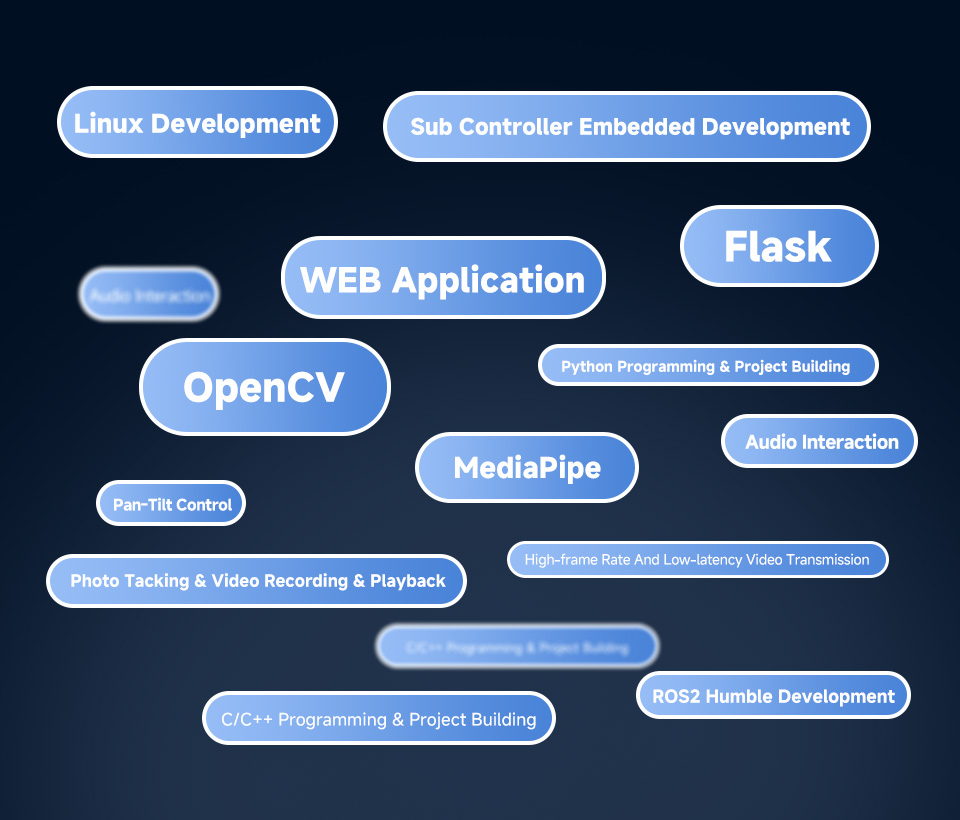

The host controller adopts Raspberry Pi for AI vision and strategy planning, and the sub controller uses ESP32 for motion control and sensor data processing

This ensures both advanced decision-making performance and system compatibility. All AI functions of the previous AI Kit series products are supported.

Equipped with a 5MP 160° wide-angle camera for capturing every detail

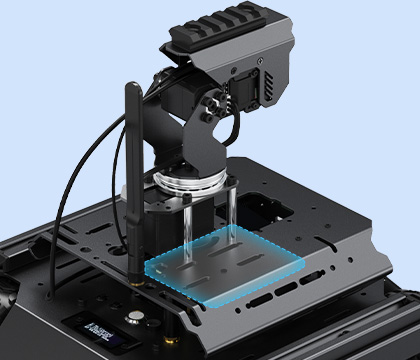

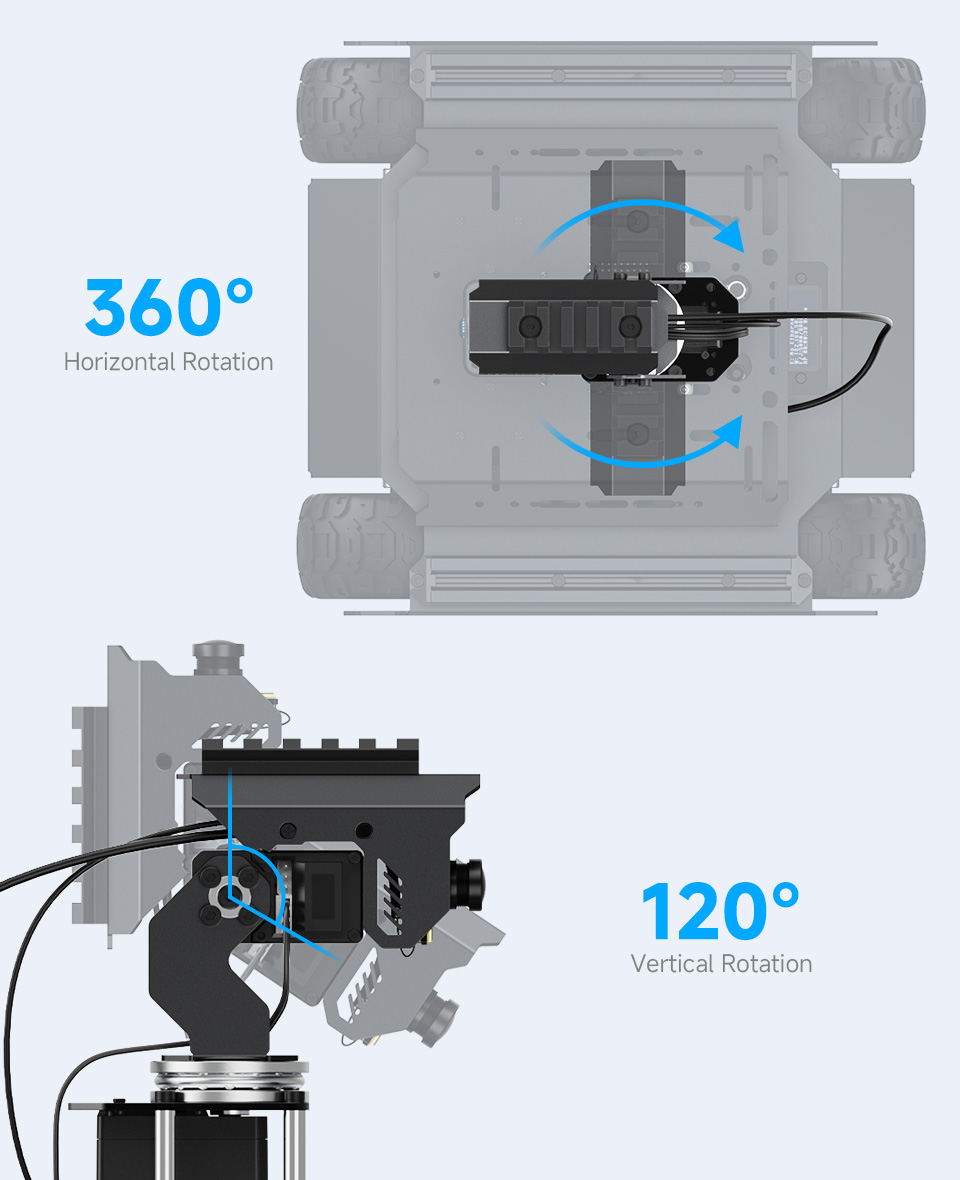

The Pan-Tilt adopts high-torque bus servos with excellent expansion potential, providing a control experience similar to that of FPS games

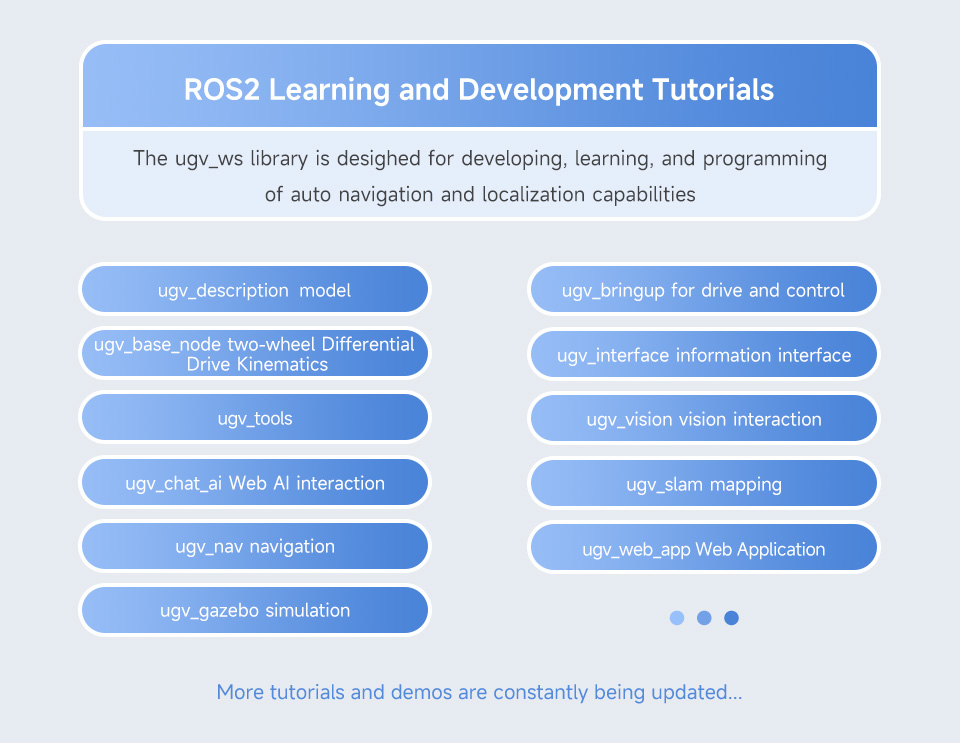

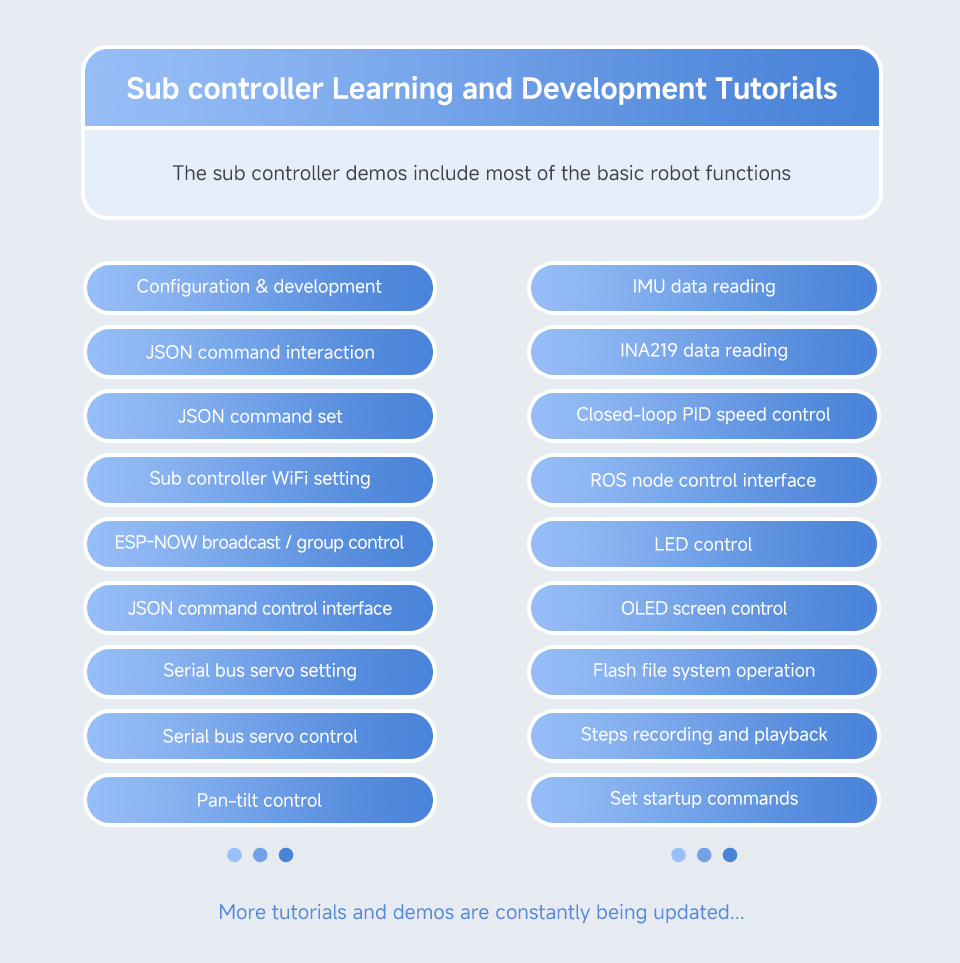

All demos for the host controller and sub controller are open source, including the robot description file (URDF model), the sensor data processing node of the sub controller, kinematic control algorithms, and various remote control nodes

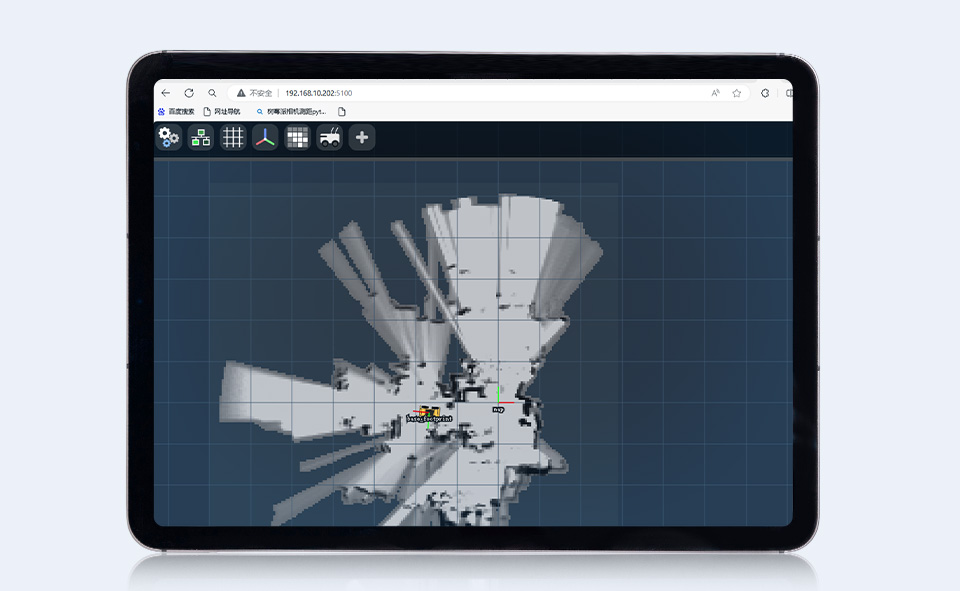

Meet the needs of mapping in different scenarios

Gmapping 2D mapping

Cartographer 2D mapping

RTAB-Map 3D mapping

Adopts multiple sensors with high cost-effectiveness and practicality

SLAM Toolbox is used to perform mapping and navigation simultaneously in unknown environments, simplifying the task execution process. The UGV robot can autonomously explore unknown areas and complete the mapping, which makes it suitable for unmanned applications

With Large Language Model (LLM) technology, users can give commands to the robot in natural language, enabling it to perform tasks such as moving, mapping, and navigation

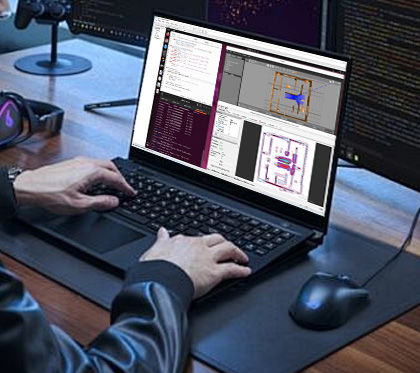

You can use the basic ROS 2 functions on the Web without installing a virtual machine on the PC, and cross-platform operation on Android or iOS tablets is supported. Users can simply open a browser and control the robot for moving, mapping, navigation, and other operations

Users can send control commands to the robot from a script to perform operations such as moving, obtaining the current location, and navigating to a specific point, which is more convenient for secondary development
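When scripting "navigate to a specific point", the goal is usually given as a planar pose (x, y, heading), while ROS 2 pose messages store orientation as a quaternion. The sketch below shows only that conversion, under the assumption of flat-ground motion. The function names are hypothetical, and a plain dictionary laid out like a `geometry_msgs/PoseStamped` message stands in for the real message type so the example runs without a ROS 2 installation.

```python
import math


def make_goal_pose(x: float, y: float, yaw_deg: float, frame: str = "map") -> dict:
    """Build a PoseStamped-like dict for a planar goal (hypothetical helper)."""
    half = math.radians(yaw_deg) / 2.0
    return {
        "header": {"frame_id": frame},
        "pose": {
            "position": {"x": x, "y": y, "z": 0.0},
            # Rotation about the vertical axis only: x = y = 0.
            "orientation": {
                "x": 0.0,
                "y": 0.0,
                "z": math.sin(half),
                "w": math.cos(half),
            },
        },
    }


def yaw_from_quaternion(x: float, y: float, z: float, w: float) -> float:
    """Recover the heading in degrees, e.g. from a reported current pose."""
    return math.degrees(
        math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    )


if __name__ == "__main__":
    goal = make_goal_pose(1.0, 2.0, 90.0)
    q = goal["pose"]["orientation"]
    print(goal)
    print(yaw_from_quaternion(q["x"], q["y"], q["z"], q["w"]))
```

The same `yaw_from_quaternion` helper is useful in the other direction, for turning a pose reported by the robot back into a human-readable heading.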

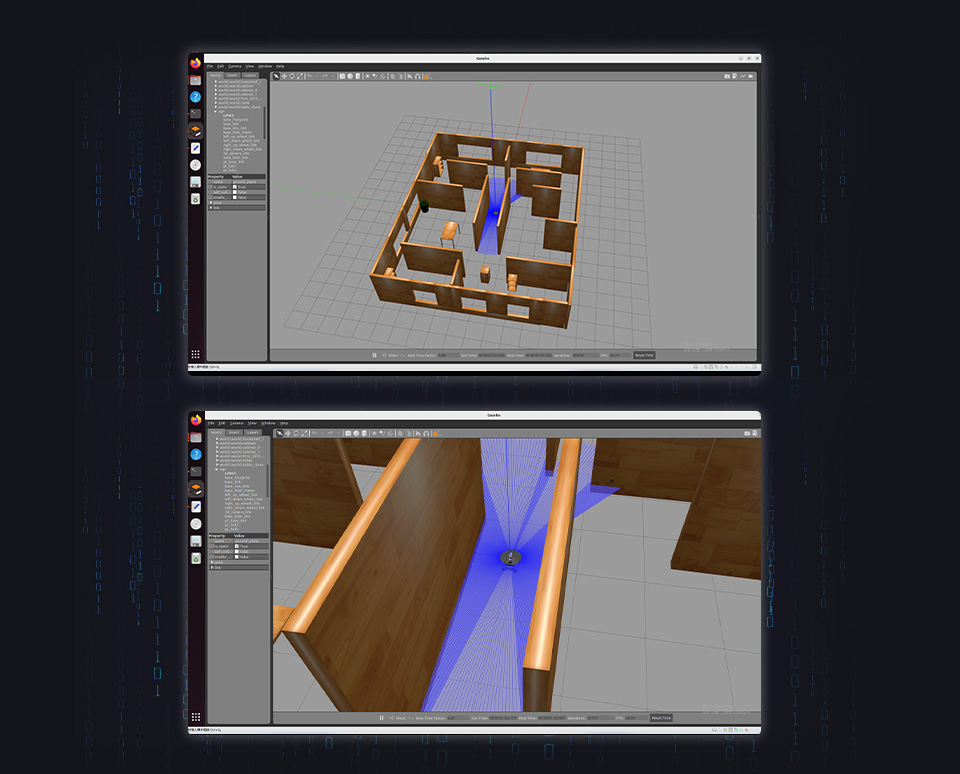

Provides a Gazebo robot model and a complete functionality library for simulation debugging, helping you verify and test the system during the early stages of development

High-brightness LED light for ensuring clear images in low-light conditions

Comes with a 21 mm wide rail and a 30 kg·cm high-precision, high-torque bus servo for tactical extension

For reference only; the accessories in the picture above are NOT included

Comes with 2 × 1020 European standard profile rails and supports installing additional peripherals with boat nuts, making it easy to adapt the robot to special operating scenarios

Note: Only the rail, boat nuts, and M4 screws are included, other accessories should be purchased separately.

6 wheels × 4WD design: six wheels provide a more stable platform and a larger contact area, while 4WD provides stronger power and traction to deal with various terrains and obstacles
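A chassis of this kind is commonly modelled as a differential drive: the left and right sides are driven at different speeds, and equal but opposite speeds give rotation on the spot. The following is a minimal sketch of that textbook model, not the kit's actual control code. The track width is a hypothetical placeholder (measure your own chassis), wheel slip is ignored, and the only figure taken from this page is the 1.3 m/s default maximum speed.

```python
MAX_SPEED = 1.3      # m/s, default max speed from the specification table
TRACK_WIDTH = 0.17   # m, hypothetical left-to-right wheel spacing


def wheel_speeds(linear: float, angular: float,
                 track_width: float = TRACK_WIDTH,
                 max_speed: float = MAX_SPEED) -> tuple:
    """Convert body velocity (m/s, rad/s) to (left, right) side speeds in m/s."""
    left = linear - angular * track_width / 2.0
    right = linear + angular * track_width / 2.0

    # If either side exceeds the limit, scale both so the turn ratio is kept.
    peak = max(abs(left), abs(right))
    if peak > max_speed:
        scale = max_speed / peak
        left *= scale
        right *= scale
    return left, right


if __name__ == "__main__":
    print(wheel_speeds(0.5, 0.0))   # straight line: both sides equal
    print(wheel_speeds(0.0, 2.0))   # in-situ rotation: sides opposite
```

Scaling both sides together, rather than clipping each one separately, preserves the commanded curvature when the request exceeds the speed limit.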

Cross-Platform Web Application

No app installation is required: users can connect to and control the robot from mobile phones, tablets, and computers through the browser-based Web App. On a PC, shortcut key control such as WASD and mouse control are supported

The web application is built on the lightweight Flask framework and uses WebRTC for ultra-low-latency real-time transmission. It is written in Python, easy to extend, and works seamlessly with OpenCV

Color recognition and automatic targeting are implemented with OpenCV. One-key Pan-Tilt control and automatic LED lighting are supported, and more functions can be added
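Automatic targeting can be thought of as turning the pixel offset of a detected color blob into a Pan-Tilt correction. The sketch below illustrates one simple proportional scheme and is not the kit's implementation. It assumes the detector already reports the blob centre in pixels, that the 160° figure is the horizontal field of view, that angle varies linearly with pixel position (only a rough approximation for a wide-angle lens), and that positive pan means right and positive tilt means up. `aim_correction` and `gain` are hypothetical names.

```python
def aim_correction(cx: float, cy: float, width: int, height: int,
                   hfov_deg: float = 160.0, gain: float = 0.5) -> tuple:
    """Return (pan_delta, tilt_delta) in degrees that move the target
    toward the image centre. Proportional step, linear pixel-to-angle model."""
    # Offset from the image centre as a fraction of the frame, in [-0.5, 0.5].
    dx = cx / width - 0.5
    dy = cy / height - 0.5

    # Assumption: vertical FOV scales with the aspect ratio of the frame.
    vfov_deg = hfov_deg * height / width

    pan_delta = gain * dx * hfov_deg
    tilt_delta = -gain * dy * vfov_deg  # image y grows downward
    return pan_delta, tilt_delta


if __name__ == "__main__":
    # Target to the right of and above the centre of a 640x480 frame.
    print(aim_correction(480, 120, 640, 480))
```

A gain below 1 moves only part of the way on each frame, which trades tracking speed for less overshoot when the detection is noisy.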

Automatic picture or video capturing

Face recognition is implemented with OpenCV, and the robot can automatically take a photo or record video once a face is recognized

The default model recognizes many common objects

AI Interaction with body language

Combines OpenCV and MediaPipe to realize gesture control of Pan-Tilt and LED

Gesture control for photo taking

LED ON/OFF and blacklight control

Complex Video Processing Tasks

MediaPipe is an open-source framework developed by Google for building cross-platform multimedia processing pipelines. It provides a set of pre-built components and tools, and its high-performance processing capability enables the robot to respond to and process complex multimedia inputs such as real-time video analytics.

Face Recognition

Attitude Detection

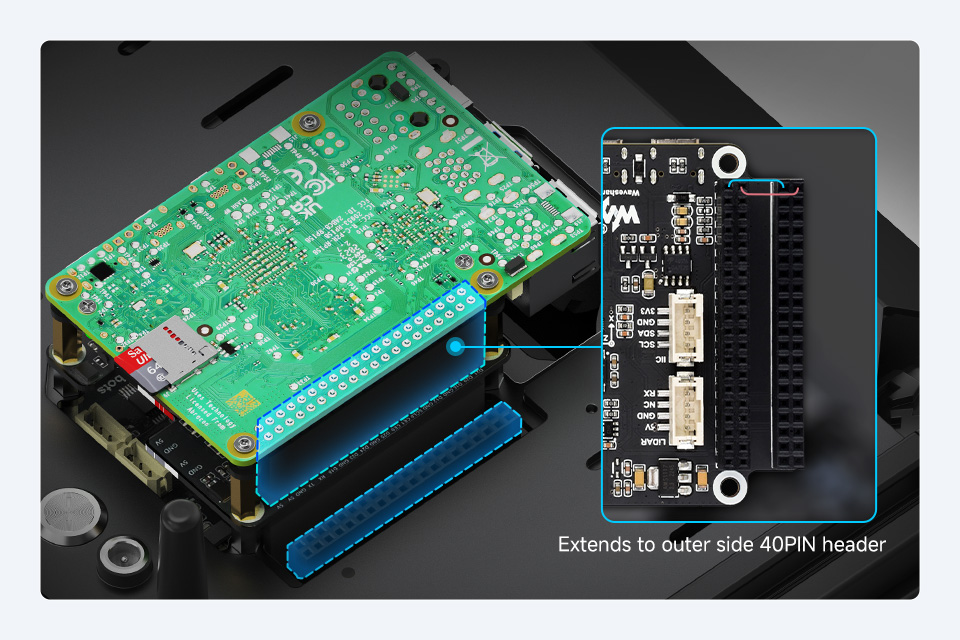

The robot only occupies the UART interface of the Raspberry Pi GPIO for communication, and the driver board brings out a 40PIN header on its outer side for expanding more peripherals and functions
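Because the host and sub controller share a single serial link, commands and feedback need some framing so that each side can tell where one message ends. The sketch below shows one common approach, newline-delimited JSON, purely as an illustration. The message fields (`cmd`, `left`, `right`, `voltage`) and both function names are hypothetical and are not the kit's documented protocol. The serial port itself is left out so the example runs with the standard library only.

```python
from __future__ import annotations

import json


def encode_command(kind: str, **fields) -> bytes:
    """Serialise one command as a compact, newline-terminated JSON line."""
    message = {"cmd": kind, **fields}
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_lines(buffer: bytearray) -> list[dict]:
    """Pop every complete JSON line from a receive buffer.

    A partial line at the end stays in the buffer until more bytes arrive.
    """
    messages = []
    while True:
        newline = buffer.find(b"\n")
        if newline < 0:
            break
        line = bytes(buffer[:newline]).strip()
        del buffer[: newline + 1]
        if line:
            messages.append(json.loads(line))
    return messages


if __name__ == "__main__":
    print(encode_command("drive", left=0.2, right=0.2))

    # Simulated bytes read from the serial port: one full line, one partial.
    rx = bytearray(b'{"cmd":"status","voltage":11.8}\n{"cmd":"sta')
    print(decode_lines(rx), bytes(rx))
```

Keeping the partial tail in the buffer matters on a serial link, where a read can return in the middle of a message.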

Real-time monitoring of the operating status of the robot

Multiple Functions for Easier Expansion

Quick to set up, easy to expand

Easily customize and add new functions without modifying front-end code

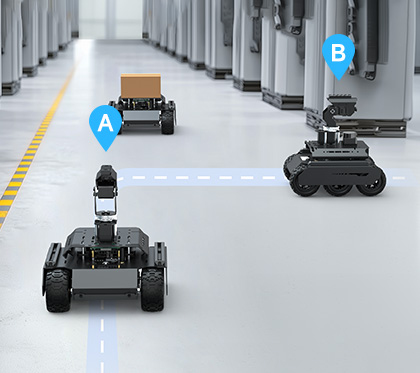

between robots

Based on ESP-NOW communication protocol, multiple robots can communicate with each other without IP or MAC address, achieving multi-device collaboration with 100-microsecond low-latency communication

Comes with a wireless gamepad, making robot control more flexible. You can connect the USB receiver to your PC and control the robot remotely via the Internet. Provides open source demo for customizing your own interaction method

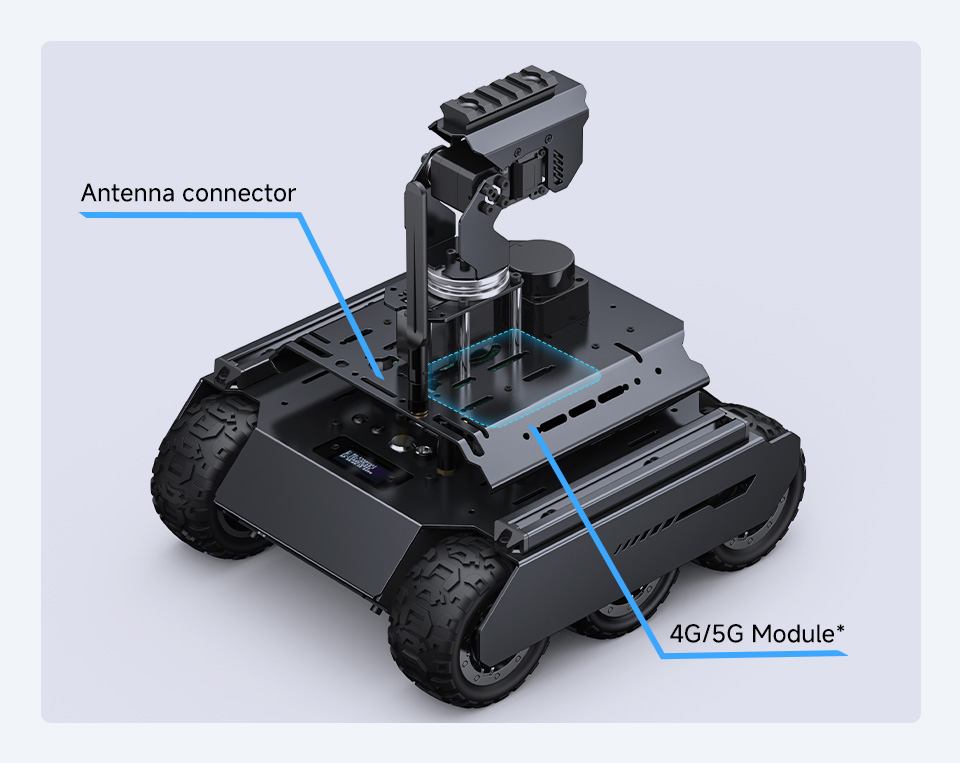

Supports installing 4G/5G module* for the application scenarios without WiFi

* You may need to use Tunneling Service such as Ngrok, Cpolar, or LocalTunnel to expose the local network service of the robot (Flask application) to the Internet so that you can control the robot from anywhere.

- Our web application demos are based on WebRTC for real-time video transmission.

- WebRTC (Web Real-Time Communications) is a technology that enables web applications and sites to establish peer-to-peer connections, to capture and optionally stream audio and/or video media, and to exchange arbitrary data between browsers without requiring an intermediary.

- We provide comprehensive Ngrok tutorials* to help you get started quickly and realize robot control across the internet.

* Only usage tutorials for Ngrok are provided; we do not provide any Ngrok accounts or servers. You can follow our tutorial to open your own Ngrok service, or choose another tunneling service according to your needs.

If you have a spare phone, you can mount it on the robot with the holder shown below and use the phone to create a hotspot for the robot, achieving remote control across the internet at a lower cost

* Comes with a smartphone holder with 1/4″ screw in the package

Develop while you learn

Jupyter Lab can be accessed from devices such as mobile phones and tablets to read the tutorials and edit the code on the web page, making development easier

We provide complete tutorials and demos to help users get started quickly with learning and secondary development

Full dual-controller technology stack

* Resources may vary between products; please check the wiki page to confirm which resources are actually provided.

Quick Overview

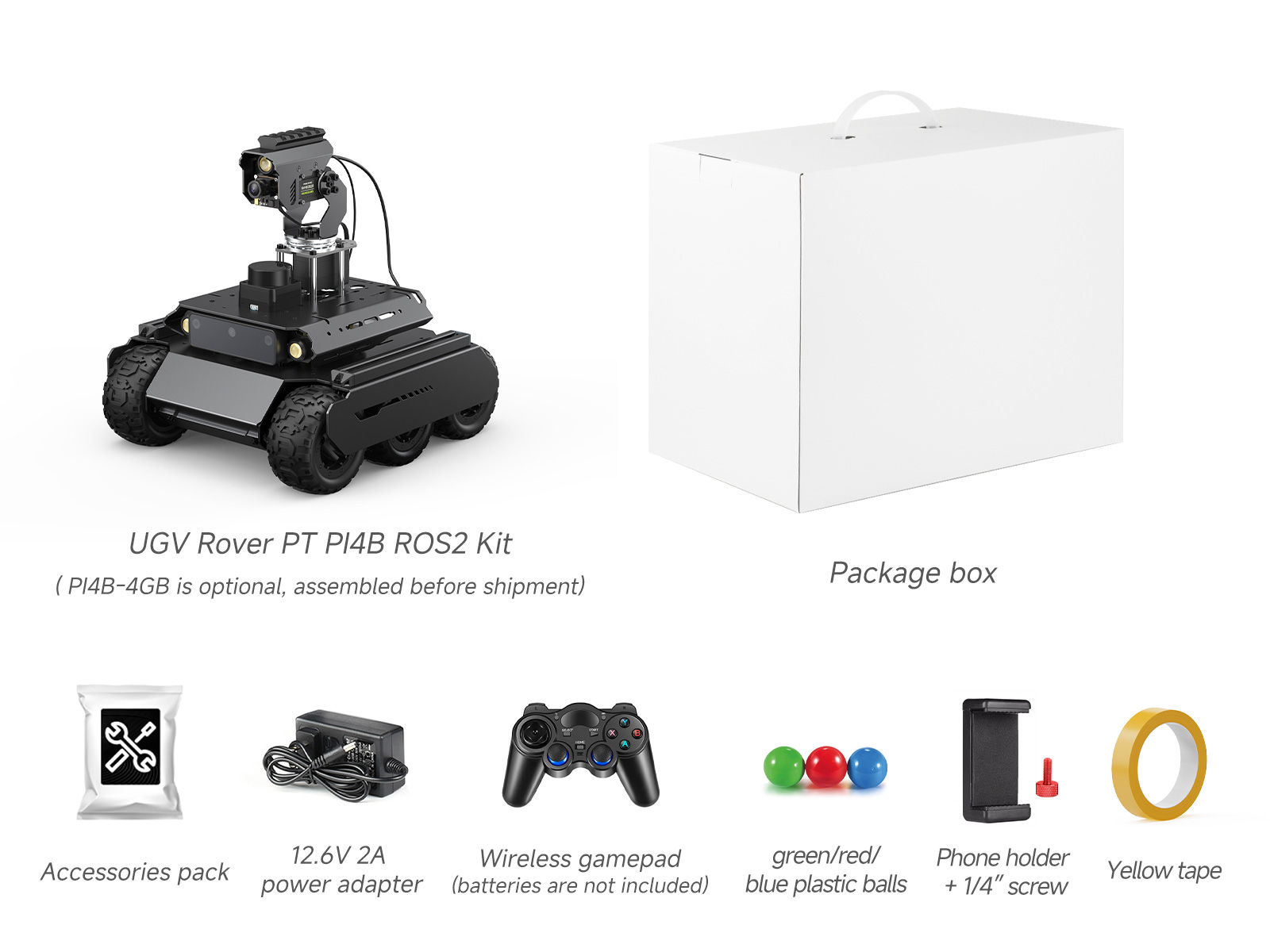

UGV Rover PT PI4B ROS2 Kit

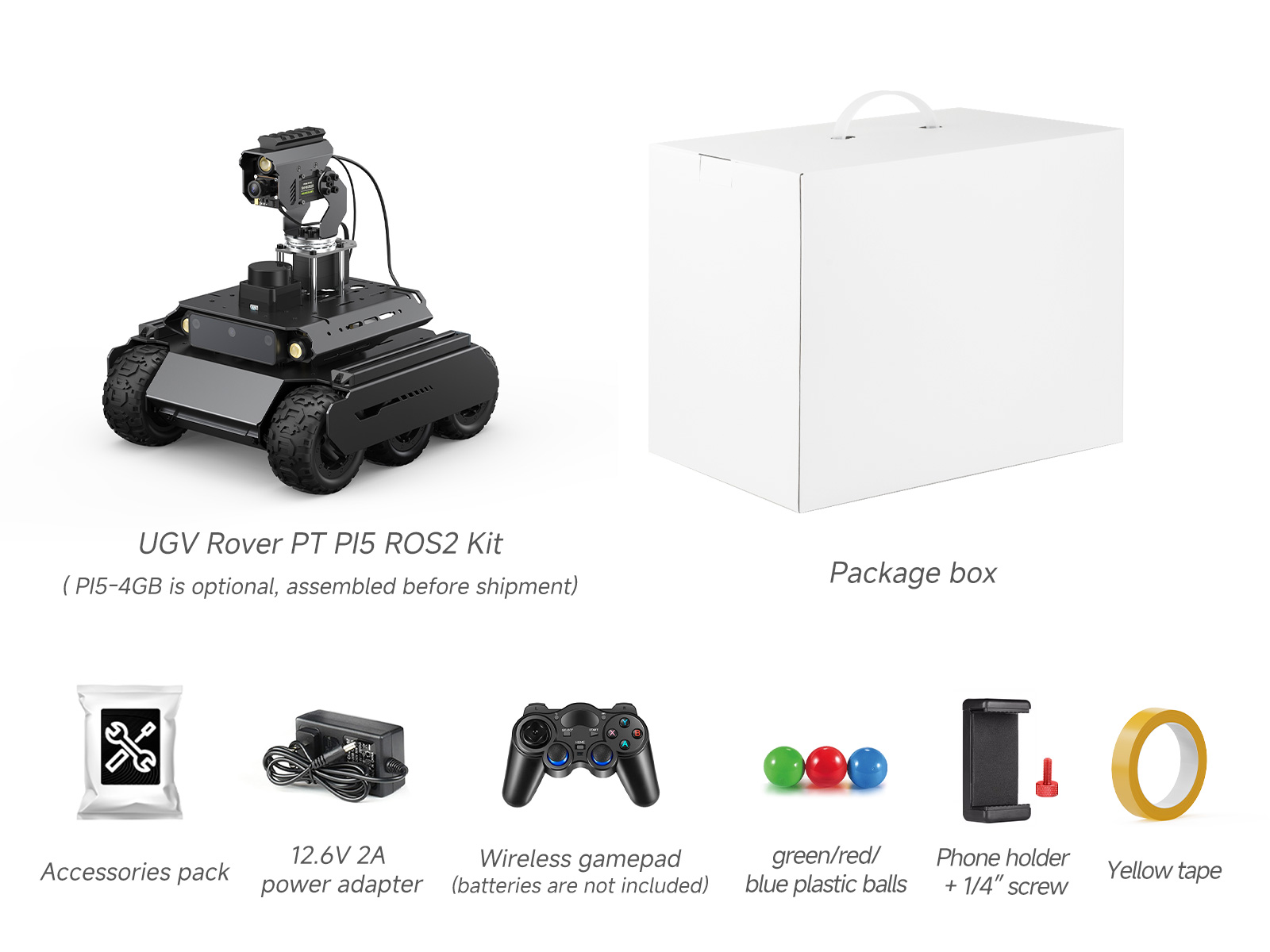

UGV Rover PT PI5 ROS2 Kit

UGV Rover PI4B ROS2 Kit

UGV Rover PI5 ROS2 Kit